In Part 1 of this micro-series we offered six observations about indicators and measurements in general, with a focus on some common difficulties. In this installment, we will look at several points relating specifically to the measurement of innovation. This is in no way intended to be a comprehensive treatment of the subject, but I will try to cover some useful points.

Let’s start with the question of the necessity and viability of measuring innovation. When we worked with Tal Givoly, at the time Chief Scientist of the Israeli software company Amdocs, he used to say: “Management is so interested in innovation, that it plants a seed and then pulls it out of the ground every day to see how its roots are growing.” I like to quote him to managers as a call for caution, not only about meddling with innovation but also about trying to monitor it too closely. Still, the opposite approach is no less misleading. According to this view, innovation is a magical phenomenon, a spark that one cannot ignite at will, let alone measure, and any attempt to do so will extinguish the fire. This is obviously misguided: unless you measure the results of your innovation efforts, there is only a slim chance that you will receive the funding and support needed to sustain them beyond the initial burst of enthusiasm that started the drive.

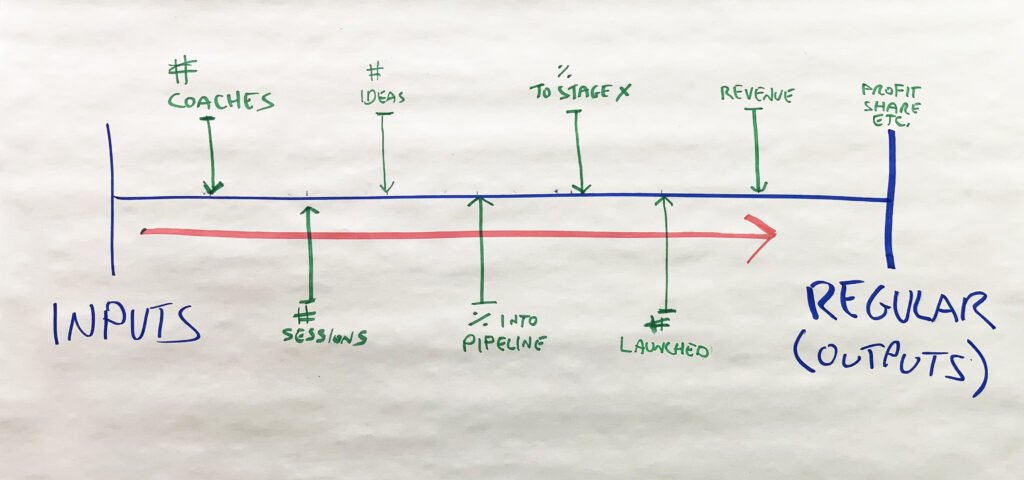

Contrary to the “mystical” attitude, we tend to say, with only a bit of simplification, that innovation is like any other business process and therefore should be measured just as you would, say, your sales or efficiency efforts. So, assuming that innovation must be monitored and measured, there are two basic intuitions as to how this should be conducted. The first stems from the following logic: one’s motivation to be innovative is the wish to achieve the organization’s goals (see our post on the definition of innovation) and therefore these very goals, as expressed by their corresponding indicators, can adequately assess the value of our efforts to innovate. If we launch an innovation drive which does not result in an impact on our regular business indicators, then we are wasting our time and money. Therefore, the only indicators that an organization requires in order to monitor and evaluate its innovation efforts are those that are used to measure its performance anyway.

The appeal of this approach is that it both simplifies the measurement process and ties the innovation efforts inextricably to the company’s goals. Unfortunately, it has two grave shortcomings: 1) it is very hard to isolate the influence of your innovation efforts from the myriad factors that influence business results, both external (a pandemic, say) and internal (flaws in a product, or unwise allocation of resources); 2) even when you can isolate the contribution of your innovation activities, their influence on business goals will become evident only after several months, or even years. So how do you monitor your activities and make decisions as you go along, if their effects will appear only after, say, 6 months?

These difficulties often lead innovation leaders to adopt the opposite approach: since it is virtually impossible to isolate, especially in real time, the output of innovation efforts, it is seen as wiser to simply measure inputs. You hatch your plans for innovation, define specific activities, and closely monitor their delivery and implementation.

This is indeed a much more practical approach, which lends itself easily to project management and real-time decision making. The only trouble is that you may find yourself beautifully and efficiently implementing a useless plan that does not translate into any important benefit.

Our suggestion is, therefore, to create a dynamic “sliding scale” approach to measuring innovation activities, which I will demonstrate in the following scenario (derived, with some generalizations and simplifications, from our work with various companies and organizations).

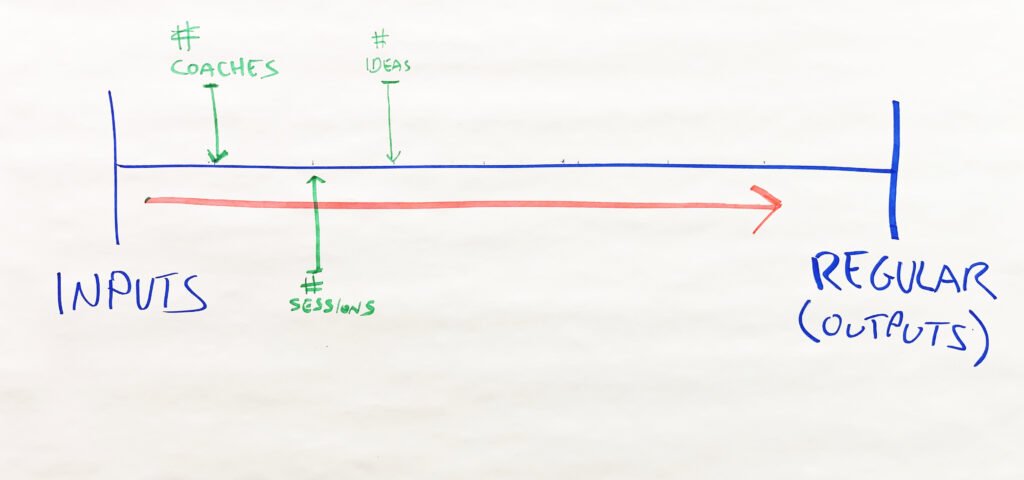

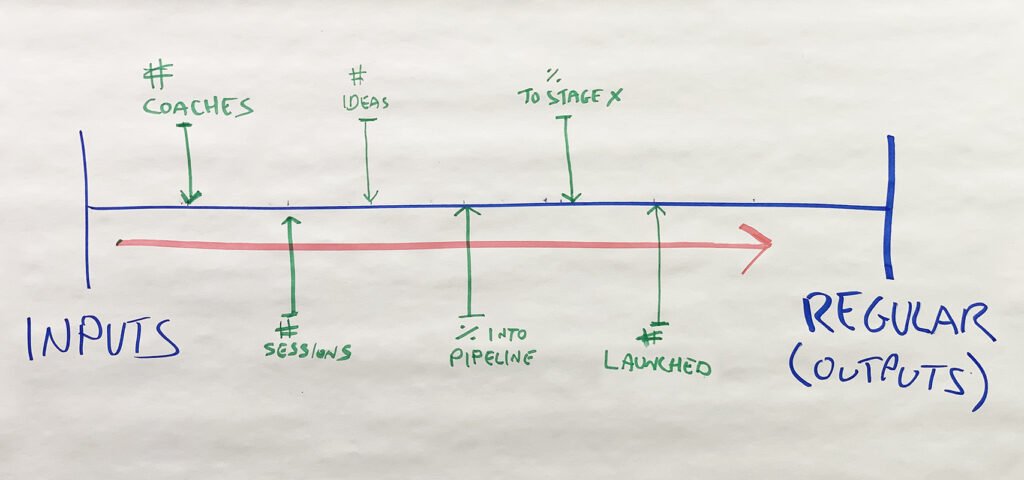

Imagine that you have decided to train 400 of what we call “Innovation Coaches” (in a forthcoming post, we will describe the design, deployment and management of such a coach community). Their goal is to promote innovation throughout the organization through the facilitation of innovation sessions. The first indicator will therefore be: number of coaches trained. Since you have concluded that you need 400 coaches, you want to make sure that they are trained as planned. This is not a trivial task, given both the logistical requirements and the HR challenges.

But, of course, training a large and increasing number of coaches can end up being a huge waste of time and resources unless they deliver results. So, upon completing the training of your first batch of, say, 16 coaches, you must immediately start measuring the next indicator: number of sessions delivered per coach. This helps assess the cumulative effect of the coaches on the organization, and along the way gives you some insight into the percentage of active coaches among those who have completed the training (if fewer than 80% are active in some way, we recommend that you review either the quality of the training or the trainees’ selection process, or both).
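To make the arithmetic behind this second indicator concrete, here is a minimal sketch in Python. The `Coach` record, the function names and the sample figures are all invented for illustration, and treating a coach as “active” once she has delivered at least one session is an assumption of ours, since the 80% rule of thumb does not come with a precise definition.

```python
from dataclasses import dataclass

# Rule of thumb from the text: below 80% active coaches, review training or selection.
ACTIVE_SHARE_THRESHOLD = 0.80


@dataclass
class Coach:
    name: str                # hypothetical identifier
    sessions_delivered: int  # sessions facilitated since graduation


def sessions_per_coach(coaches):
    """Average number of sessions delivered per trained coach."""
    if not coaches:
        raise ValueError("no trained coaches yet")
    return sum(c.sessions_delivered for c in coaches) / len(coaches)


def active_share(coaches, min_sessions=1):
    """Fraction of trained coaches with at least `min_sessions` sessions.

    Assumption: "active" means one or more delivered sessions.
    """
    if not coaches:
        raise ValueError("no trained coaches yet")
    active = sum(1 for c in coaches if c.sessions_delivered >= min_sessions)
    return active / len(coaches)


def needs_review(coaches):
    """True when the active share falls below the 80% rule of thumb."""
    return active_share(coaches) < ACTIVE_SHARE_THRESHOLD


# Invented figures: a first batch of 16 coaches, 12 of whom ran 2 sessions each.
batch = [Coach(f"coach-{i}", 2 if i < 12 else 0) for i in range(16)]
print(sessions_per_coach(batch))  # 1.5
print(active_share(batch))        # 0.75
print(needs_review(batch))        # True
```

In this made-up batch only 75% of the coaches are active, so the rule of thumb would prompt a second look at the training or the selection process.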

Obviously, even as you celebrate a hopefully growing number of sessions, you may only be causing an even greater waste of people’s time, and therefore of your company’s resources. It all depends on what is actually achieved in the sessions, which is extremely hard to assess, since the impact of a session on a participant’s brain and behavior cannot easily be measured. You can therefore resort to a simplified proxy for a session’s productivity, the most practical and direct one you can measure: the number of ideas produced in the session.

These are only the first three steps in an ongoing process. But how should this process be timed? How soon should one move from one indicator to the next? The right answer to these questions is crucial to the success of the entire enterprise: not only of the measurement itself but also of the actual results, since the mere act of measurement exerts, as we all know, a strong influence on the measured activities.

Say that a coach has graduated from her training. She is keen on trying out her new skills, but, on the other hand, she is a bit hesitant about having to stand up and lead her peers, or maybe even her superiors, through an exercise that has less than a 100% probability of success. She may also have to contend with a lack of support from her boss, who is complaining that she is behind schedule in her regular work. The knowledge that someone is counting the number of sessions she runs can nudge her in the right direction, together with, perhaps, an extra carrot, such as the promise that any coach who facilitates, say, 6 sessions in the first two months is eligible for advanced training. So, counting the number of delivered sessions is probably a good idea immediately after the coaches graduate from their training.

But caution! At this fragile stage, counting the number of ideas produced in a session is nearly always premature, and will most likely significantly decrease the number of sessions and lower the percentage of active coaches: “Now, not only do I need to add to my workload, argue with my boss, stand in front of not-always-collaborative colleagues and risk the embarrassment of wasting everyone’s time in what could turn out to be a useless session, but they also want to monitor the number of ideas that came out? I’ll need to spend time documenting and reporting, and then find myself being scolded because they came to the conclusion that my session hadn’t been productive enough?”

Time and again we see that when management pushes to measure session results too early, coaches react either by lowering the number of sessions they facilitate, or by conducting them without reporting, which, in terms of organizational evaluation, amounts to the same thing. This phenomenon emphasizes two key dilemmas in measuring innovation activities:

- What you measure affects not only what you know but also what happens to those you are measuring;

- The more data you collect about an activity, and the more you tend to impose reporting efforts on the actors, the less time, energy and motivation they will have to do the actual work.

We strongly recommend, therefore, that you allow coaches to feel confident conducting sessions, and hopefully even to enjoy the process, before you start monitoring the sessions’ outcomes. But at some point this must happen, and as you start counting the number of ideas produced per session, the next question arises. As we have all painfully learned, mainly thanks to the inefficacy of brainstorming, a large quantity of ideas not only fails to guarantee quality but can also become a burden and an energy drain. This is why the next indicator is required: the percentage of ideas from a session that make it into the company’s idea-development pipeline. Subsequently, you can select one or more stages of your development process and count the ideas that reach each one, or jump to the next important milestone: the number of ideas launched into the market (or, if your output is not products for the market, an equivalent measure such as processes changed, services offered, social programs launched, etc.).

Using this same logic, the sliding scale for measuring the results of your innovation efforts can be extended according to your organization’s process of product development or project management. Common additional stages are the revenues, profits, savings or market share obtained through the launch of a product or initiative. In this way, what started out at one pole as a measurement of pure inputs converges on the opposite pole: reliance on the very indicators that monitor your organization’s goals and objectives.
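For readers who like to see a structure written down, the scale described above can be sketched as an ordered list of indicators, as in the short Python example below. This is only an illustration under stated assumptions: the names `SLIDING_SCALE`, `indicators_in_use` and `pipeline_rate` are invented, the sample numbers are made up, and the choice to keep tracking earlier indicators once a later one is switched on is ours. When to move from one stage to the next remains a judgment call that no code can make for you.

```python
# The sliding scale as an ordered list, from pure inputs to business goals.
SLIDING_SCALE = [
    "coaches trained",
    "sessions delivered per coach",
    "ideas produced per session",
    "% of session ideas entering the development pipeline",
    "ideas launched (or equivalent outputs)",
    "business results: revenue, profit, savings, market share",
]


def indicators_in_use(stage):
    """Indicators tracked once the scale has slid to `stage` (0-based).

    Assumption: earlier indicators stay in use when a later one is added.
    """
    if not 0 <= stage < len(SLIDING_SCALE):
        raise ValueError("stage is outside the sliding scale")
    return SLIDING_SCALE[: stage + 1]


def pipeline_rate(ideas_produced, ideas_in_pipeline):
    """Percentage of a session's ideas that entered the development pipeline."""
    if ideas_produced <= 0:
        raise ValueError("the session produced no ideas to evaluate")
    if not 0 <= ideas_in_pipeline <= ideas_produced:
        raise ValueError("pipeline count must be between 0 and ideas produced")
    return 100.0 * ideas_in_pipeline / ideas_produced


# Invented example: coaches have just graduated, so only two indicators apply.
print(indicators_in_use(1))
# Invented example: 6 of the 40 ideas from a session entered the pipeline.
print(pipeline_rate(40, 6))  # 15.0
```

Keeping the stages in a single ordered list makes it easy to extend the scale with whatever milestones your own development process contains.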

Note that the approach described here attempts to address some of the pitfalls and follow some of the guidelines mentioned in my previous post on the subject:

- Be practical by starting superficially, but do not be daunted by the difficulties and insist on going further and deeper;

- Consider the needs both of those who are doing the measuring and those who are being measured;

- Remember that measuring is an invasive process that affects the measured for better or for worse, and that timing can crucially determine which one it is;

- Consider carefully what you decide to measure, and be prepared to defend the rationale of its importance;

- Be transparent about the results, whatever they end up demonstrating.

In a future post, we will describe how this same approach can be expanded to apply to organizational innovation, rather than to a specific activity, by breaking up the large organizational effort into what we call The 7 Elements of Organizational Innovation. Meanwhile, we recommend that you try to apply the sliding scale approach to one or several of your innovation activities. And we would love to hear how it goes and what you learn.