On June 30th, I posted about the meaning of the term “innovation”. These were the post’s opening sentences:

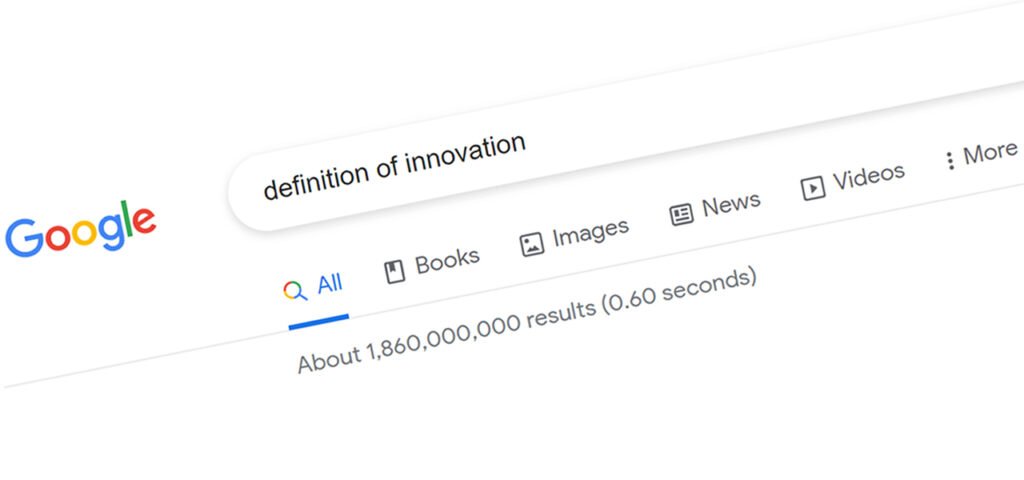

When I typed “definition of innovation” into Google the other day, I got 1,710,000,000 results, which of course means absolutely nothing. That raises the question of why arguably the most influential company on earth has consistently been feeding us this useless piece of data for so many years. So, I promise to use this very same opening sentence in a future post (repurposing, saving on first-sentence emissions) about some popular fallacies around indicators and measurement.

Keeping my promise here, I reiterate my bewilderment and will use this example to shed some light on an oft-asked question: how should innovation be measured? The question came up yet again last week in a conversation with an Innovation Manager at a medium-sized financial company: “They [management] are demanding that we boost innovation throughout the company, when I don’t even know what innovation is, nor have any idea how to measure it.” The former question was addressed in the post mentioned above. In this post (both parts), we will address the latter.

Here, in Part 1, we will start by using the example of Google’s indicators to share some observations on what should or shouldn’t be done when measuring performance in general. In Part 2, we will analyze some specifics of measuring in the context of innovation.

As I write this, I repeat my search for “definition of innovation”. Why does Google choose to tell me that my search took 0.60 seconds to complete and that it came up with 1,860,000,000 results? Why would I care? What can I do with these two pieces of information? One can imagine that in the late 1990s this pair of indicators served to signal both “look how fast our engine is” and “see how many results we can provide you with”. Even then, there was a glaring discrepancy between the search engine’s stated differentiator and the indicators it communicated. Google claimed that its algorithm was superior because of the quality of its results, not their quantity or the speed of their delivery. So why was it claiming quality as its edge while measuring quantity and speed? This is the result of my repeated search today:

Keen readers will have noticed that the number above represents an increase of 150 million results over my earlier search, quoted in the opening paragraph. Say that Google has indeed found 150 million additional hits in less than a month. So what? Have I gained anything from this increase? Obviously not. Clearly, then, these indicators were not selected to cater to my needs as a seeker of information. Why were they selected, then? One possible reason, I suspect, is that they are simply easy to measure. So, the first observation on the misleading use of indicators is:

Observation #1: People and organizations tend to skew towards indicators that are easy to measure.

Ben Gomes, Google’s Vice-President of Engineering, has been quoted as saying, “…our goal is to get you the exact answer you’re searching for faster.” He goes on to explain: “Our research shows that if search results are slowed by even a fraction of a second, people search less (seriously: A 400ms delay leads to a 0.44 percent drop in search volume, data fans).”

Note that although the professed goal is to provide an “exact answer”, there is no indicator of the extent to which this goal has been attained. This lacuna may be attributed either to a harsher variant of Observation #1 (above):

Observation #1*: If you haven’t figured out how to measure something, disregard it (even if you know it is crucially important).

Or, in more unfortunate cases:

Observation #2: If you’ve figured out how to measure it, but you’re not happy with the results, hide them.

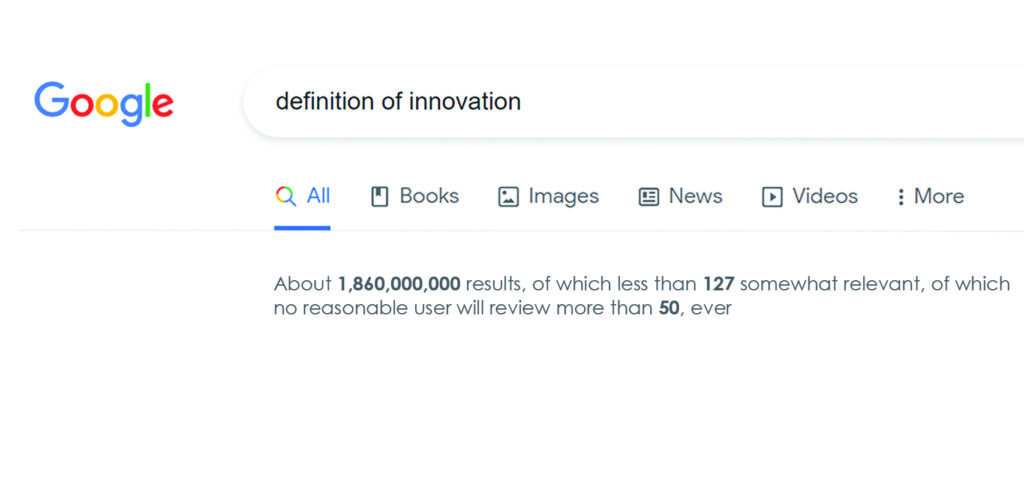

For obvious reasons, we don’t expect Google to show us a search result that looks like this:

But wait, you may say: the Google VP did explain the great importance of speed, so it is surely a crucial factor that should be measured. Yes, it may be crucial, but for Google rather than for you. This, then, is a prime example of:

Observation #3: Technologists and bureaucrats will tend to measure and communicate indicators that are important for them, rather than for their users/clients.

It is, no doubt, of the utmost importance for Google’s techies to measure and monitor the precise duration of each and every one of the 5.4 billion(!) daily searches (2020) on their awe-inspiring platform. But that does not at all mean that this specific piece of data about my search is of any interest to me. Ask yourself: have you ever noticed this number? If I told you that your search x took 0.36 seconds or your search y an agonizing 0.72, would these numbers mean anything to you? It is a stretch to imagine that Google, with its 135,301 employees and US$182.527 billion in revenue (both figures refer to Alphabet, end of 2020), can’t figure out that these two indicators are respectively useless and meaningless to its users. Why, then, do they appear so prominently? Another possible explanation could be:

Observation #4: If you prefer to avoid sharing certain indicators, direct the spotlight to others.

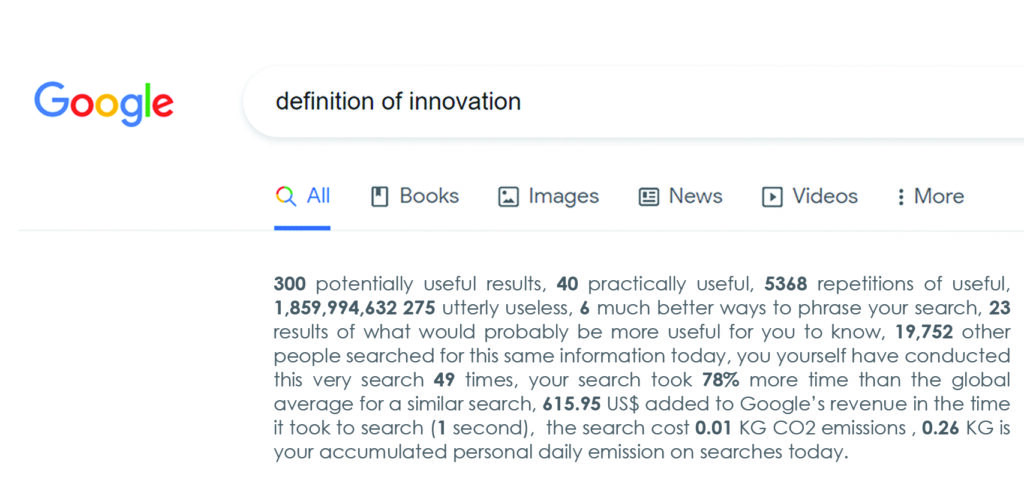

This may be the most sensible explanation of the Google indicator puzzle: speed and quantity may actually be playing the role of what I propose to call “decoy indicators”. The function of this class of indicators is to let a company offer a semblance of transparency while in fact obscuring all those indicators that its audience might genuinely be interested in monitoring but is not even aware of. The Google-search-indicator-set of our dreams might include some or all of these:

Most of these indicators would probably be as useless to most of us as the two original ones, but they would at least supply a welcome variation on the theme. Perhaps they could be rotated across searches, so that the user either selects those she wishes to see or is served a random selection of two or three each time. The other indicators could, say, be accessed by clicking on an icon. Does it really matter which indicators are selected? It could matter very much.

Nine years ago, when we took our 3-week-old youngest daughter to our favorite pediatrician for a small check-up, he noticed a minor irregularity and prepared to perform a small but invasive test on her. I stopped him and asked why he was planning to do so. He answered that it was “the protocol” and that the test would supply him with an important piece of data, which he would record in her newly created computer file. When I asked whether the value of this data would determine any specific action, he admitted that it would not. I therefore asked him not to perform the test, to which he immediately agreed. This incident reconfirmed a rule I strictly follow in all my dealings with medical staff, to the chagrin of many of them: always politely ask them to provide a simple rationale for whatever action they are about to perform. Surprisingly, very often they are unable to do so, apart from citing “the protocol”. The corollary of this rule, for medical tests and examinations as for measurements in general, is:

Observation #5: When you select or design an indicator, make sure you know precisely what you want to know and why.

Even if the price of measuring and communicating a useless indicator is low (even lower than that of the minimally invasive test proposed for my daughter), you would do best to avoid it, as indicators tend to take on a life of their own, bestowing undue importance on the measured quantity.

Observation #6: Indicators, even if selected for the wrong reasons, and therefore useless, will appear important because they’re there.

And once the indicators are there, you will find yourself playing the corporate-Edmund-Hillary, inevitably climbing the management-imposed-Himalaya and diverting energy from other, more constructive endeavors. This is one reason we all at times experience a certain unease even as we excel according to some set of indicators or other. Deep down, intuitively, beneath our superficial satisfaction at hitting our numbers, a voice is asking: but what for?

In the next post (Part 2), we will discuss how these phenomena play out in organizations’ attempts to measure their level of innovation using indicators such as the number of ideas submitted to idea boxes, the number of patents, the percentage of revenue spent on R&D, the percentage of sales derived from new products, and other commonly used metrics. We will see that many such standard indicators can be applied usefully, depending on timing and context, and will review several examples of how they can be put to good use.